Stop Rejecting Your Best Engineers.

Why the “FAANG Standard” is Systematically Selecting “Puzzle Performers” Over True “Problem Solvers.”

📊 Every assertion below has a cited source; see the References at the end.

Google: 90% of our engineers use your software (Homebrew), but you can’t invert a binary tree on a whiteboard, so get out!

This is the infamous (and true) story of Max Howell, creator of Homebrew. In my 15+ years as a Tech Director at Intel, Microsoft and Arm, I’ve seen this absurd ‘false negative’ play out time and time again.

This story is jarring because it exposes the core anxiety every tech leader faces: while we spend enormous capital and race against project deadlines, is the very “filter” we rely on systematically rejecting the A-level problem-solvers we desperately need?

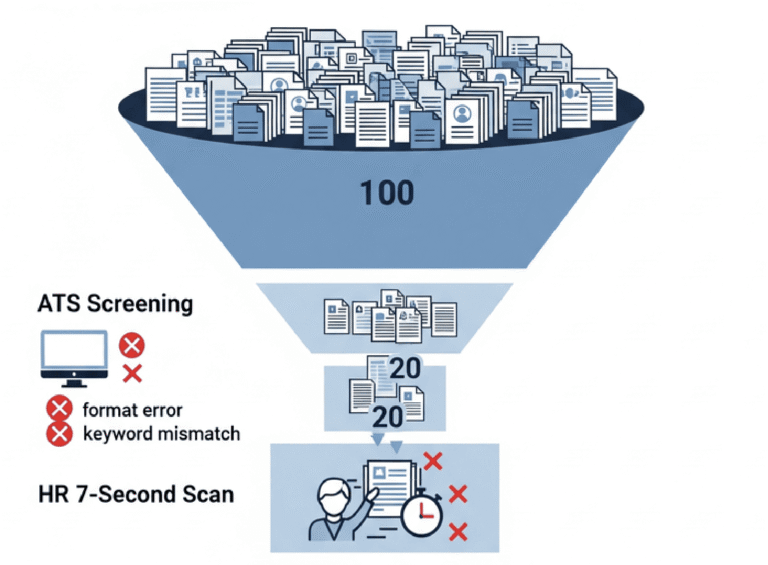

Fig. 1: Is our filter rejecting the A-level problem-solvers we desperately need?

Your next great hire is likely being rejected because of a whiteboard problem that has zero correlation with performance, and it’s costing you dearly.

This isn’t just theory. By one industry estimate, an unfilled technical role costs a company an average of £500 per day. That is the price you are paying.
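To make the daily figure concrete: the cost of a vacancy scales linearly with the number of days the role stays open. The 45-day vacancy below is a hypothetical duration chosen purely for illustration, not a figure from the cited source; only the £500/day rate comes from the estimate above.

```latex
\begin{aligned}
C_{\text{vacancy}} &= d \times \text{£}500 \text{ per day} \\
d = 45 \text{ (hypothetical)}:\quad C_{\text{vacancy}} &= 45 \times \text{£}500 = \text{£}22{,}500
\end{aligned}
```

At the same rate, every extra week a false negative keeps the seat empty adds another £3,500.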

The Diagnostic Failure: Why Your “Algorithm” Filter Measures Anxiety, Not Competence

We’ve fallen for a collective delusion: that “puzzle-solving” equals “engineering capability.”

During my time at Microsoft and Arm, we had long suspected that “clever puzzles” didn’t filter for good engineers. Google’s own internal data later confirmed our suspicions: Laszlo Bock, Google’s former SVP of People Operations, revealed that an analysis of tens of thousands of interviews found “zero relationship” between how interviewers scored candidates and how those hires actually performed, and that brain teasers in particular “don’t predict anything.”

Bock was blunt: “They’re a complete waste of time… They serve primarily to make the interviewer feel smart.”

Fig. 2: They serve primarily to make the interviewer feel smart

Why? Because LeetCode tests the ability to find a single optimal solution in a perfect, closed environment. Worse, it’s a fundamentally flawed measuring instrument. Research from NC State found that the stress of a traditional whiteboard interview cuts candidates’ performance by more than half. In other words, whiteboard interviews don’t measure programming ability; they measure “performance anxiety”.

I still remember interviewing a brilliant, highly senior engineer. He was so nervous at the whiteboard he was visibly shaking, fumbling a simple for-loop. I pulled him into a conference room with just a pen and paper. Thirty minutes later, he had produced one of the most elegant system designs I’d ever seen.

What does the real business battlefield require? Handling ambiguity.

A Microsoft Research study of what makes a great software engineer identifies this as a combination of two key traits: being “Self-reliant” (solving complex, novel problems with limited guidance) and the ability to “Execute” (knowing when to stop analyzing and start building, avoiding “analysis paralysis”).

LeetCode rewards finding the perfect solution to a fully specified problem. The real world rewards execution despite ambiguity. In other words, your filter isn’t selecting for “practitioners”; it’s rewarding “puzzle performers.”

Replace “Algorithm Theater” with “Practical Competence Assessment”

My advice is blunt: stop the “algorithm theater.” As companies, we don’t need “actors”; we need “craftsmen.”

The solution is to shift to “practical competence assessment” that simulates the real work. The data contrast is staggering. A traditional “unstructured interview” has a pathetically low predictive validity, with a correlation coefficient of just r = 0.20-0.38.

However, a 2023 IEEE Software Journal study found that when “Take-Home Programming Tests” (an asynchronous method simulating a real project) were used, the correlation with “first-year job performance” skyrocketed to r = 0.62.

This isn’t surprising to me in the slightest. A take-home test measures “Craft,” while LeetCode merely measures “Memory.”

Fig. 3: LeetCode vs. Take-Home

The same study found the correlation with “maintainable production code” was even higher, at r = 0.67.
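One standard way to compare these coefficients is to square each r, which gives the rough share of variance in the outcome that the assessment accounts for. The arithmetic below uses only the figures quoted above; the variance-explained framing is an added gloss, not a number reported by the cited studies.

```latex
\begin{aligned}
\text{Unstructured interview:}\quad & r^2 = 0.20^2 \text{ to } 0.38^2 \approx 0.04 \text{ to } 0.14 \\
\text{Take-home test (first-year performance):}\quad & r^2 = 0.62^2 \approx 0.38 \\
\text{Take-home test (maintainable code):}\quad & r^2 = 0.67^2 \approx 0.45
\end{aligned}
```

On this reading, the take-home format explains roughly 2.7 to 9.6 times as much of the variation in performance as an unstructured interview, with the caveat that correlations from different studies and outcome measures are not strictly comparable.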

This confirms what I’ve seen work: the closer the test is to the actual job, the stronger its predictive power. The core of the assessment model I built is this: “asynchronous projects,” “debugging challenges,” and “trade-off conversations.”

These mechanisms work because they deliberately simulate the “uncertainty,” “ambiguous requirements,” and “communication costs” of a real job. They force a candidate out of the whiteboard’s vacuum to truly demonstrate their “trade-off decisions” and “engineering mindset”—the true markers of Ownership.

Recouping the “False Negative Cost”: Building a High-Ownership Team

When you stop using the wrong ruler (LeetCode) to measure the wrong thing (puzzle-solving), you stop rejecting A-Players like Max Howell.

For you, the Engineering VP, this means you can finally identify talent that demonstrates true “Craftsmanship”.

This model filters for people who know the “difference between ‘getting it done’ and ‘getting it right.’” They “don’t take shortcuts.” Critically, they “don’t stop caring” after the code is checked in; their Ownership extends to deployment and maintenance.

This is my definition of an A-Player.

Fig. 4: New definition of an A-Player

This is the real ROI companies need: a team of problem-solvers who can deliver maintainable code in the face of ambiguity, not a team of algorithm test-takers.

You’re reclaiming the A-Players your old mechanism was systematically filtering out.

Is Your Assessment Mechanism Working For You, or Against You?

My core belief is this: The greatest cost of hiring isn’t the “False Positive” (a bad hire). It’s the “False Negative” (a great hire you missed).

As an Engineering VP or Hiring Manager, your challenge isn’t to design harder puzzles. It’s to answer this: is your current assessment mechanism filtering for talent, or is it systematically rejecting it?

I urge you to re-examine your entire interview process. Is it measuring “puzzle-solving,” or is it assessing “practical engineering competence”?

Have you had a “near-miss” experience where you almost rejected an A-Player? Or do you remember a candidate who failed the whiteboard but thrived elsewhere?

As a “practitioner”: Have you ever been the “Max Howell” in the room? Did you lose a massive opportunity because of one broken, irrelevant question?

Share your diagnosis in the comments—how many A-Players has your old mechanism cost you?

P.S. For VPs, Directors, and HR Heads: If your team is also facing critical bottlenecks in talent assessment and retention, my DMs are always open for a peer-to-peer discussion. I’m always happy to exchange war stories. You can find me on LinkedIn (https://bit.ly/3X1Y2lS).

References

Primary Citations

- [R1] Resonance Data/Insight: The ironic case of Max Howell (creator of Homebrew): “Google: 90% of our engineers use your software (Homebrew), but you can’t invert a binary tree on a whiteboard, so get out.” [https://www.quora.com/Whats-the-logic-behind-Google-rejecting-Max-Howell-the-author-of-Homebrew-for-not-being-able-to-invert-a-binary-tree]

- [KRQ-1] The cost of delayed hiring: Use this formula to see how much it’s really costing you. [https://rsnwltd.com/recruiting/the-cost-of-delayed-hiring-use-this-formula-to-see-how-much-its-really-costing-you]

- [P1] Mechanism Debunk: “Google Data: Brain teasers show zero correlation with job performance.” LinkedIn: [https://www.linkedin.com/pulse/20130620142512-35894743-on-gpas-and-brain-teasers-new-insights-from-google-on-recruiting-and-hiring]; Business Insider: [https://www.businessinsider.com/google-brain-teaser-interview-questions-dont-work-2015-10]

- [KRQ-2] Unstructured Interviews: correlation coefficient r = 0.20–0.38. McDaniel et al. (1994), The Validity of Employment Interviews: A Comprehensive Review and Meta-Analysis. [https://home.ubalt.edu/tmitch/645/articles/McDanieletal1994CriterionValidityInterviewsMeta.pdf]

- [KRQ-3] Tech Sector Job Interviews Assess Anxiety, Not Software Skills (NC State University News). [https://news.ncsu.edu/2020/07/tech-job-interviews-anxiety/]

- [S1] Solution Case: “Take-Home Coding Tests: Correlation with first-year performance review r = 0.62.” [https://fullscale.io/blog/take-home-coding-tests-vs-live-coding-interviews/]

- [P2] Behavioral Diagnosis: What Makes A Great Software Engineer? “Handling Ambiguity: Self-reliant, completes complex and novel problems under limited guidance.” (Microsoft Research technical report) [https://www.microsoft.com/en-us/research/wp-content/uploads/2019/03/Paul-Li-MSR-Tech-Report.pdf]